In Part 2, we take a look at how Lean IT can be applied to make significant improvements to data quality, reducing the wasteful rework associated with incomplete and inaccurate information. See Part 1 here.

For a practical example of Lean IT and data management, see the webinar Lean IT: Driving SAP Continual Process Improvement.

How Lean IT Can Help

Lean IT is a framework for seeing information and technology in a new light by applying the principles and methods of lean. Lean is all about creating excellence in the workplace, in the work, and in the quality of information. Bad data produces information that cannot be acted on, which leads to errors in judgment and behavior.

If there is a chronic lack of high-quality information, it’s impossible to sustain a smooth flow of work, because fixing mistakes drains precious energy and mental capacity from your employees.

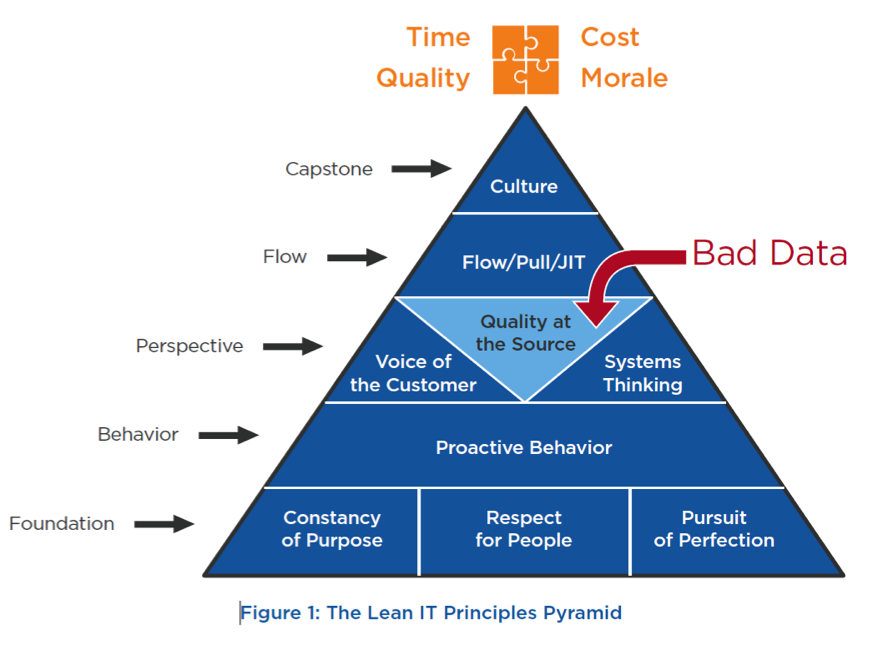

The illustration above is the principles pyramid developed while writing the book Lean IT. At its core, lean IT is about leveraging technology to deliver customer value with the least amount of effort required. In order to achieve this, we remove all unnecessary effort – ambiguity of process, avoidable mistakes, self-inflicted variation, corrections, rework, delays, and extra steps. For the purpose of this discussion, let’s focus on the principle of Quality at the Source.

Quality at the source means performing work right the first time, every time. Imperfect work (work that is incomplete or inaccurate) is never sent forward to the next operation, end users, or customers. We measure quality in terms of percentage of work that is accurate, complete, timely, and accessible (as defined by end users and customers). The critical nature of the quality of information is well known. We’re all familiar with the truism “Garbage in, garbage out!” Without quality information, the results will always be inferior and require heroic efforts and creative rework to meet customer expectations.
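As a rough illustration of the measurement described above, here is a minimal Python sketch. It assumes each piece of work has already been checked against the four criteria, and it counts an item as "right the first time" only when it meets all four. The `WorkItem` class, the `quality_percentage` function, and the sample data are hypothetical and invented for this example; in practice, end users and customers would define what each criterion means.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    """One unit of work, scored against the four quality criteria (hypothetical model)."""
    accurate: bool
    complete: bool
    timely: bool
    accessible: bool

    def is_right_first_time(self) -> bool:
        # An item counts as quality work only if it meets every criterion.
        return self.accurate and self.complete and self.timely and self.accessible


def quality_percentage(items: list[WorkItem]) -> float:
    """Return the percentage of items that meet all four criteria."""
    if not items:
        raise ValueError("cannot measure quality on an empty set of work items")
    passed = sum(1 for item in items if item.is_right_first_time())
    return 100.0 * passed / len(items)


# Made-up sample: two of the four items meet every criterion.
sample = [
    WorkItem(accurate=True, complete=True, timely=True, accessible=True),
    WorkItem(accurate=True, complete=False, timely=True, accessible=True),
    WorkItem(accurate=True, complete=True, timely=True, accessible=True),
    WorkItem(accurate=False, complete=True, timely=True, accessible=True),
]

print(f"{quality_percentage(sample):.1f}% of work was right the first time")
```

Requiring all four criteria at once is a deliberately strict choice for this sketch: an item that is accurate but incomplete still triggers rework downstream, so it is not counted as quality work.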

The essential ingredient of quality information is quality data. It is amazing that many, if not most, organizations focus the majority of their effort and resources on technology while paying very little attention to the quality of data within the system. You can have state-of-the-art hardware, application stack, network, connectivity, and security, but if you have data issues, end users receive information they cannot act on, and the result is confusion, frustration, errors, and workarounds. This wasted, non-value-added effort and annoyance only gets worse over time. Why? Because once people no longer trust the system, they adapt skillfully and do their jobs outside of it!

The Promise of ERP

Today, complex business enterprises are connected and managed through integrated information systems like SAP. Enterprise Resource Planning (ERP) has been around since the 80s and is both a blessing and a curse. The promise of an integrated system with a cohesive database that creates a single rock-solid record of “the truth” has been the vision of ERP systems since their inception. ERP is the ultimate connective tissue of the enterprise, enabling disparate silos of the organization to work towards common objectives, access information, maintain accurate records, and share information. Imagine trying to run a modern corporation without technology!

When actionable information is missing or unavailable, the gap is often hard to detect. People tend to rely on what the system tells them and often discover that information is inaccurate or incomplete only after the fact, when they hear about a problem from downstream operations, end users, or, in the worst case, their customers.

Data Quality, ERP, and Respect for People

In lean, respect for people refers to management’s responsibility to create a work environment where people are positioned to be successful and grow to their full capabilities. It means creating a workplace where everyone has the tools, processes, and information they need to do great work. It also means creating a culture where uncertainty and ambiguity are actively eradicated, while transparency and trust are intentionally fostered. Knowing your ERP system is housing bad data and not doing anything about it is the antithesis of respect for people, and sends a clear message to all that management places a higher priority on other things.

If poor information quality becomes a chronic issue within ERP, users lose trust in the system and rely on ingenuity to get what they need to complete their work. Spreadsheets, stand-alone databases, pay-per-user cloud-based apps, in-house solutions developed outside of IT, and other inventive efforts by users add new layers of anonymous technology in the shadows of the ERP system. This creates a black market of information beyond the integration, security, and scrutiny of the IT department! The technical debt associated with shadow IT systems accumulates over time, crippling an organization’s ability to respond to complex performance issues and blocking otherwise straightforward upgrades to system functionality.

As the sanctioned ERP system goes through controlled releases of functional modules and upgrades, informal, unauthorized shadow IT systems proliferate spontaneously at a very rapid pace driven by user needs, the bureaucracy of IT, and the distrust of ERP.

In my next post, we’ll go more deeply into how Lean IT comes to grips with bad data by eliminating its creation at the source.